Issue #178 - Length-based Overfitting in Transformer Models

Introduction

Neural Machine Translation (NMT) has achieved impressive progress nowadays, but there are still some challenges that remain to be explored. Many people may have noticed that neural-based MT systems normally perform worse on very long sequences. Some previous research explored the weaknesses of RNN models with respect to long sequence modeling, but similar studies have yet to be done for Transformer models. In this post, we review a paper by Variš and Bojar (2021) which suggests that this issue might be caused by the mismatch between the length distributions of training data and evaluation set.

Experiment with String Editing Operations

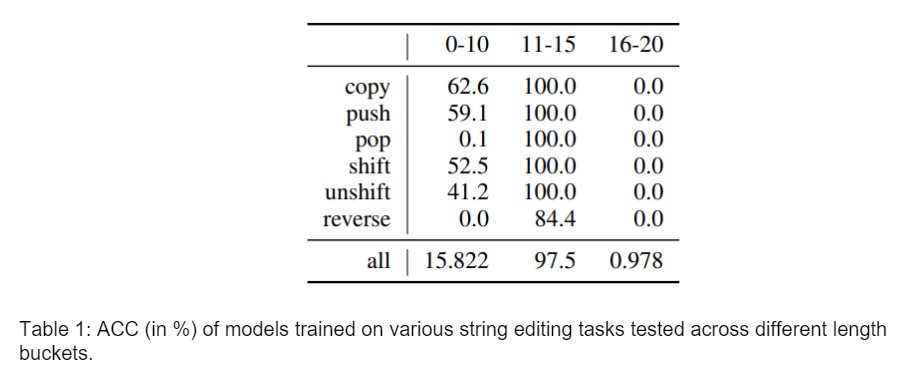

In order to directly investigate the impact of the sequence length in the training data on the model performance without any struggle in evaluation ambiguity, the first experiment focuses on building a simple model trained with sequences consisting of only two characters: 0 and 1. All sequences are unique (no duplicates) and separated by whitespaces. The goal of this model is to translate these sequences following the tasks listed below:

- copy: copy the input sequence to the output.

- unshift X: add a single character X (0 or 1) to the beginning of the sequence.

- push X: add a single character X (0 or 1) to the end of the sequence.

- shift: remove a single character from the beginning of the sequence.

- pop: remove a single character from the end of the sequence.

- reverse: reverse the character order in the input sequence.

The whole dataset is split into three buckets based on length range: 0-10, 11-15, 16-20. The model is trained only with data from the bucket range 11-15. They split the data from this range into three parts: 28K for training, 1K for validation and 1K for testing. Additionally, they sample 1K from the 0-10 range and 1K from 16-20 range for the evaluation. They train a separate model (Transformer-based) for each of the tasks mentioned above, so the training data size is the same (28K) for all the task models. All of the data for each task type is marked with a label at the beginning of each sequence on the source side: character (label name followed by 0, 1 for unshift and push, − for others), and a separator (|).

The model is evaluated by accuracy ACC = num_correct/num_examples. Table 1 shows the results on each task across different length buckets. We can see that the model produces high accuracy on the test set from the same length range as the training data, while the performance drops dramatically on the other buckets.

Experiment with MT in Real Scenario

Experiment with MT in Real Scenario

To test whether the findings from the first experiment is aligned with real scenario MT, the authors also train a Transformer-based Czech-English MT system following the similar pattern. After the tokenization and BPE segmentation, the whole dataset is split into buckets of sizes 1-10, 11-20, ..., 91-100 based on the sequence length of the target side. Table 2 shows the sizes of the respective training corpora.

A separate model is trained for each length bucket. All models are evaluated on test sets from different length buckets using sacreBLEU metric. These test data are extracted from the concatenation of WMT2020. According to the top part of Figure 1, it is obvious that the models perform better when translating data of target-side length similar to the training length bucket. This confirms that the model overfits to the length of the training data, it significantly affects the performance on those sequences from the buckets that are far from the training one. Note that the results across different test buckets are not directly comparable as they are different data sets. The bottom part of Figure 1 shows the average ratio between a hypothesis and reference. Systems trained with smaller length buckets produce shorter outputs, while the systems trained with bigger buckets can produce up to 10x longer outputs. Similar to Kondo et al. (2021), the authors also try to improve model performance on very long sentences by adding more synthetic data which is created by concatenating shorter training sequences. The result shows that the synthetic data can lead to a performance similar to the model trained on the genuinely longer sentences.

A separate model is trained for each length bucket. All models are evaluated on test sets from different length buckets using sacreBLEU metric. These test data are extracted from the concatenation of WMT2020. According to the top part of Figure 1, it is obvious that the models perform better when translating data of target-side length similar to the training length bucket. This confirms that the model overfits to the length of the training data, it significantly affects the performance on those sequences from the buckets that are far from the training one. Note that the results across different test buckets are not directly comparable as they are different data sets. The bottom part of Figure 1 shows the average ratio between a hypothesis and reference. Systems trained with smaller length buckets produce shorter outputs, while the systems trained with bigger buckets can produce up to 10x longer outputs. Similar to Kondo et al. (2021), the authors also try to improve model performance on very long sentences by adding more synthetic data which is created by concatenating shorter training sequences. The result shows that the synthetic data can lead to a performance similar to the model trained on the genuinely longer sentences.

Figure 1: Top: Varying performance of Transformers on test data trained only on the data from a specific target side length bucket (various lines) when evaluated on a specific test bucket (x-axis). Bottom: Average ratio between a hypothesis and reference. Dashed line indicates a ratio of 1.0.

Figure 1: Top: Varying performance of Transformers on test data trained only on the data from a specific target side length bucket (various lines) when evaluated on a specific test bucket (x-axis). Bottom: Average ratio between a hypothesis and reference. Dashed line indicates a ratio of 1.0.

In summary

The length-controlled experiments by Variš and Bojar (2021) demonstrate that Transformer models can generalize very well to unseen examples within the same length bucket but fail at similar tasks from different length buckets, shorter or longer. The results imply that the power of Transformer models may come from its ability to exploit the similarities between training and evaluation data.